Sakana AI TreeQuest: LLM Teamwork Boosts Performance

Having several large language models (LLMs) work together as a team can solve complex problems more effectively than any one of them working alone.

Japanese research company Sakana AI has introduced TreeQuest, a framework that orchestrates multiple large language models (LLMs) to collaborate on complex tasks. According to VentureBeat, this approach achieves up to a 30% performance improvement over individual models working alone.

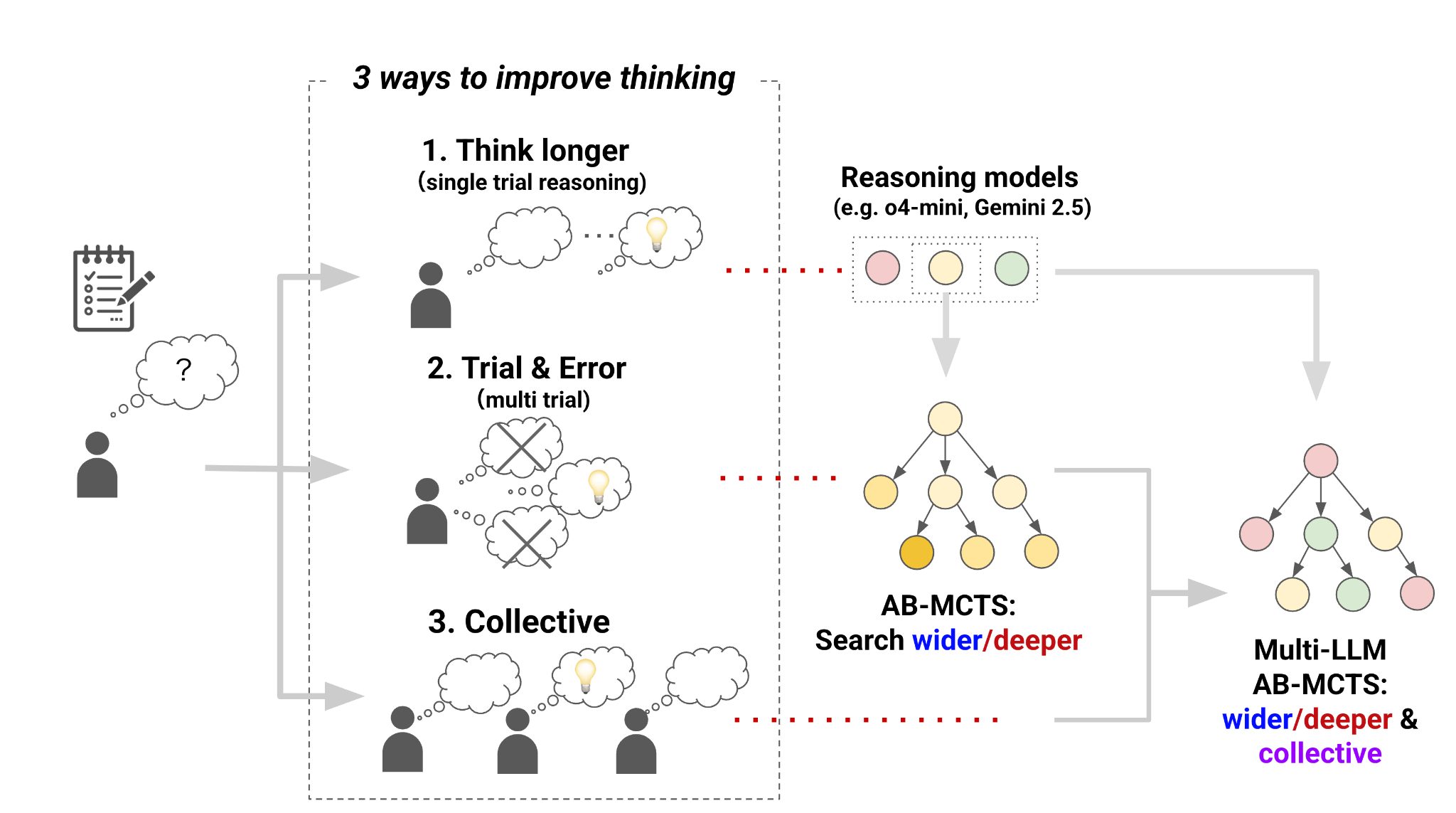

At the heart of TreeQuest lies the Multi-LLM AB-MCTS (Adaptive Branching Monte Carlo Tree Search) algorithm. This inference-time scaling technique lets multiple frontier models, such as OpenAI's o4-mini, Google's Gemini 2.5 Pro, and DeepSeek's R1, work as a cohesive team. At each step of the search, the algorithm decides whether to go deeper by refining a promising solution or wider by generating a new one, and, uniquely, which specific model should handle that step.

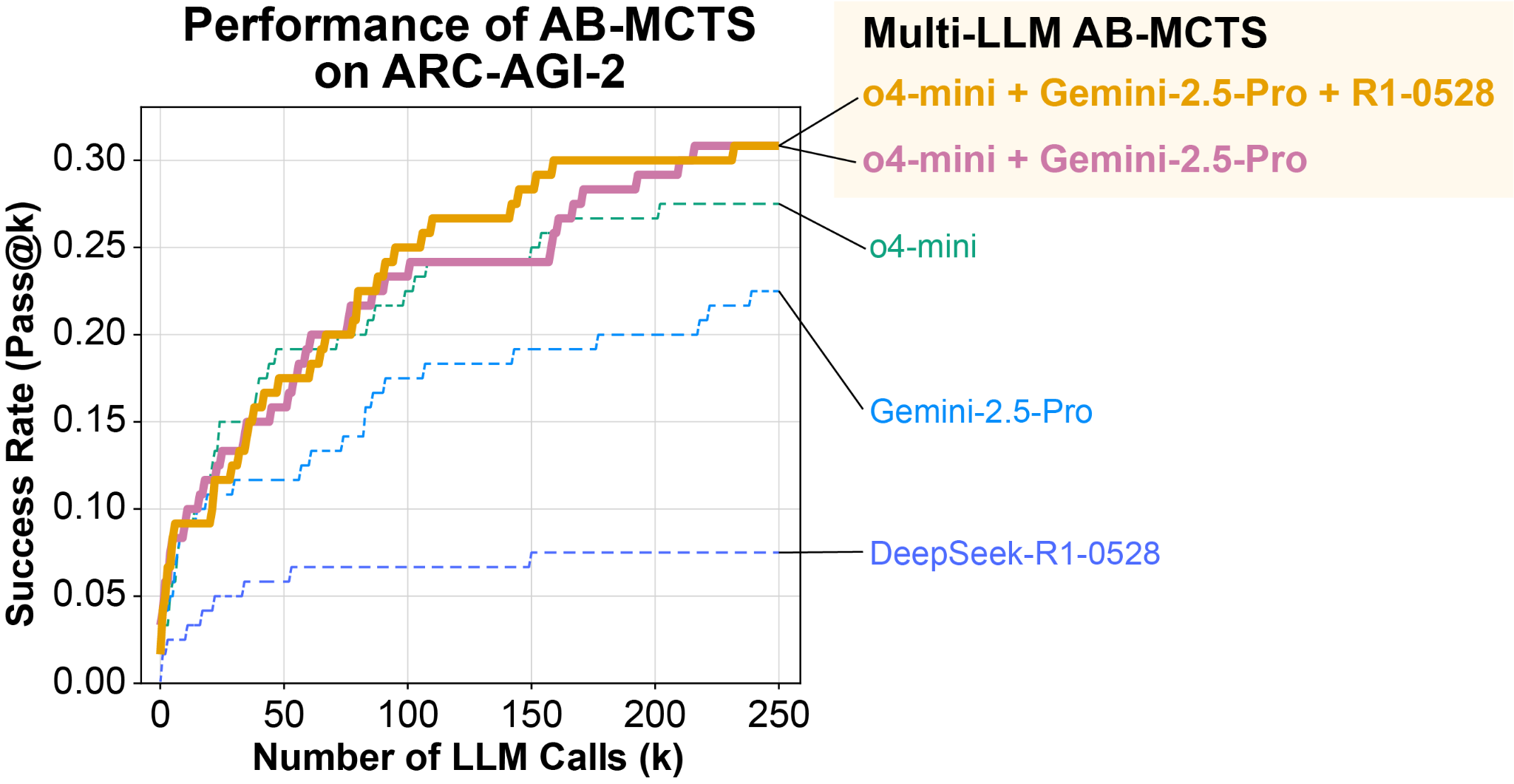

The system starts with no preference among models but learns to favor those that have historically performed better on particular subtasks, effectively creating a dynamic ensemble that leverages each model's strengths. On the challenging ARC-AGI-2 benchmark, this collaborative approach solved over 30% of the test problems, outperforming every individual model. The system also solved problems that no single model could solve on its own, in some cases because one model's errors were identified and corrected by others in the ensemble. Sakana AI has open-sourced the algorithm as TreeQuest under the Apache 2.0 license, which permits commercial use.
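Starting unbiased and gradually favoring models with a better track record is the characteristic behavior of Thompson sampling. The sketch below is a minimal Beta-Bernoulli version that treats each model as a bandit arm and each attempt as pass/fail. It is not TreeQuest's code and ignores the tree structure (in Multi-LLM AB-MCTS the model choice is made inside the search); `ModelSelector`, `simulate`, the model names, and the success rates are all hypothetical.

```python
import random


class ModelSelector:
    """Thompson sampling over models, with one Beta-Bernoulli posterior per model."""

    def __init__(self, models, seed=None):
        self.rng = random.Random(seed)
        # Every model starts from the same uniform Beta(1, 1) prior: no initial bias.
        self.alpha = {m: 1.0 for m in models}
        self.beta = {m: 1.0 for m in models}

    def choose(self):
        # Draw a plausible success rate for each model and pick the highest draw.
        draws = {m: self.rng.betavariate(self.alpha[m], self.beta[m]) for m in self.alpha}
        return max(draws, key=draws.get)

    def update(self, model, success):
        # Successes raise alpha, failures raise beta, sharpening that model's posterior.
        if success:
            self.alpha[model] += 1.0
        else:
            self.beta[model] += 1.0


def simulate(true_rates, steps=2000, seed=0):
    """Feed simulated pass/fail feedback to the selector and count how often each model is picked."""
    selector = ModelSelector(true_rates, seed=seed)
    env = random.Random(seed + 1)  # separate RNG for the simulated environment
    picks = {m: 0 for m in true_rates}
    for _ in range(steps):
        m = selector.choose()
        picks[m] += 1
        selector.update(m, env.random() < true_rates[m])
    return picks


# Hypothetical success rates; real feedback would come from scoring each model's answers.
picks = simulate({"model_a": 0.2, "model_b": 0.5, "model_c": 0.35})
print(picks)
```

Early on, all three models get tried because their posteriors are wide; as evidence accumulates, the model with the best observed record receives most of the calls while the others are still sampled occasionally.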

TreeQuest's collaborative approach is reported to deliver a 30% performance boost over individual models working alone. The improvement comes from combining the distinct strengths of different models while offsetting their individual biases and weaknesses. On ARC-AGI-2, OpenAI's o4-mini solved 23% of problems on its own but reached 27.5% accuracy when operating within the TreeQuest framework.
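To put the o4-mini figures in perspective, the change from 23% to 27.5% can be expressed both as an absolute gain and as a relative one:

$$
\Delta_{\text{abs}} = 27.5\% - 23\% = 4.5 \text{ percentage points},
\qquad
\Delta_{\text{rel}} = \frac{27.5 - 23}{23} \approx 0.196 \approx 19.6\%
$$

An absolute success rate (such as solving over 30% of problems) and a relative improvement (such as a 30% boost) are different quantities, so the two kinds of figure should not be compared directly.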

The gains are most visible on complex problems. In one notable case, researchers saw a previously unsolvable problem get solved after an incorrect solution from o4-mini was passed to DeepSeek-R1 and Gemini 2.5 Pro, which identified the error, corrected it, and produced the right answer. This shows that TreeQuest's multi-model approach does more than raise accuracy: by pooling the models' abilities, it can solve problems that remain beyond the reach of even the most capable individual model.

Adaptive Branching Monte Carlo Tree Search (AB-MCTS) is an approach to LLM inference-time scaling that dynamically balances exploration and exploitation during problem-solving. Traditional approaches either generate multiple independent answers (going "wider") or refine existing solutions (going "deeper"). AB-MCTS instead decides at each node of the search tree which strategy to pursue, based on external feedback signals. It uses Bayesian decision theory and Thompson Sampling to choose between expanding with a new candidate response and revisiting a promising existing one, combining the benefits of repeated sampling with those of multi-turn solution refinement.
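The wider-versus-deeper choice at a single node can be sketched with Thompson sampling. This is a simplified stand-in rather than the paper's exact Bayesian model: it assumes feedback scores lie in [0, 1], gives each option a Beta posterior updated fractionally by those scores, and picks the option with the highest sampled value. `Arm`, `NodeStats`, and the child ids are hypothetical names.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Arm:
    """Beta posterior over the scores (assumed in [0, 1]) that one option has produced."""
    alpha: float = 1.0
    beta: float = 1.0

    def sample(self, rng: random.Random) -> float:
        return rng.betavariate(self.alpha, self.beta)

    def observe(self, score: float) -> None:
        # Fractional update: a score of 0.9 counts as 0.9 "success" and 0.1 "failure".
        self.alpha += score
        self.beta += 1.0 - score


@dataclass
class NodeStats:
    wider: Arm = field(default_factory=Arm)    # option: generate a brand-new child here
    deeper: dict = field(default_factory=dict) # child id -> Arm for refining that child

    def decide(self, rng: random.Random):
        """Return ("wider", None) or ("deeper", child_id), whichever has the highest draw."""
        best_action, best_draw = ("wider", None), self.wider.sample(rng)
        for child_id, arm in self.deeper.items():
            draw = arm.sample(rng)
            if draw > best_draw:
                best_action, best_draw = ("deeper", child_id), draw
        return best_action

    def record(self, action, score: float, new_child_id=None) -> None:
        """Fold the feedback score for the chosen action back into the posteriors."""
        kind, child_id = action
        if kind == "wider":
            self.wider.observe(score)
            arm = Arm()
            arm.observe(score)  # the new child starts from its own first score
            self.deeper[new_child_id] = arm
        else:
            self.deeper[child_id].observe(score)


rng = random.Random(0)
stats = NodeStats()
first = stats.decide(rng)  # no children yet, so this is always ("wider", None)
stats.record(first, score=0.9, new_child_id="c1")
second = stats.decide(rng)  # now "wider" competes with refining child "c1"
print(first, second)
```

Because the choice is sampled rather than fixed, a node keeps adding new children while fresh drafts score well and shifts toward refinement once an existing child looks more promising, so the tree has no fixed branching width.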

The algorithm builds a search tree in which each node holds an input prompt and the LLM's generated output. Expanding the root produces a fresh direct answer, while expanding any other node refines that node's solution. In evaluations on complex coding and engineering tasks with frontier models, AB-MCTS consistently outperformed both repeated sampling and standard MCTS, particularly as the computational budget increased. Traditional methods fix a "width" parameter that limits how many children a node can have; AB-MCTS removes that limit, allowing it to exploit the vast output space of LLMs while directing compute toward the most promising solution paths.
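A minimal sketch of that tree structure follows. It is not TreeQuest's API: the integer-guessing task, the `feedback` function standing in for external feedback, and the stub "models" are all hypothetical, and the policy for choosing which node to expand is left out. Expanding the root drafts a fresh answer; expanding any other node refines that node's answer, and the refining model may differ from the one that drafted it, mirroring the cross-model hand-off in the ARC-AGI-2 example.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Optional

TARGET = 42  # hypothetical hidden answer that the external feedback rewards


def feedback(answer: int) -> float:
    """Stand-in for external feedback: 1.0 for the exact answer, lower the further off it is."""
    return max(0.0, 1.0 - abs(answer - TARGET) / 100)


@dataclass(eq=False)
class Node:
    answer: Optional[int]  # None only at the root, which has no output yet
    score: float
    model: Optional[str]   # name of the (stub) model that produced this node
    parent: Optional["Node"] = field(default=None, repr=False)
    children: list = field(default_factory=list)

    @property
    def is_root(self) -> bool:
        return self.parent is None


def make_model(rng: random.Random) -> Callable[[Optional[int]], int]:
    """Hypothetical stand-in for an LLM call: drafts a fresh answer or refines a previous one."""
    def model(previous: Optional[int]) -> int:
        if previous is None:
            return rng.randint(0, 100)  # direct answer (root expansion)
        if previous == TARGET:
            return previous             # nothing left to fix
        direction = 1 if previous < TARGET else -1
        # Refinement: move part of the way toward the target without overshooting.
        return previous + direction * rng.randint(1, abs(TARGET - previous))
    return model


def expand(node: Node, model_name: str, model: Callable[[Optional[int]], int]) -> Node:
    """Root expansion generates a new answer; any other expansion refines node.answer."""
    answer = model(None if node.is_root else node.answer)
    child = Node(answer=answer, score=feedback(answer), model=model_name, parent=node)
    node.children.append(child)
    return child


rng = random.Random(0)
models = {"model_a": make_model(rng), "model_b": make_model(rng)}
root = Node(answer=None, score=0.0, model=None)
draft = expand(root, "model_a", models["model_a"])     # go wider: fresh answer from the root
refined = expand(draft, "model_b", models["model_b"])  # go deeper, handing off to another model
print(draft.answer, draft.score, refined.answer, refined.score)
```

In a full search, the node to expand and the model to use at each step would come from sampling decisions like the ones described above rather than being hard-coded.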